Anthropomorphic Intelligence

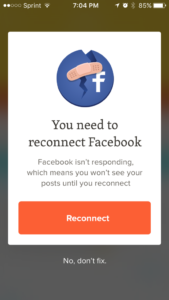

Recently, I opened Timehop for my daily dose of wibbly-wobbly, timey-wimey memories, and the app told me that I need to sign into Facebook because hey, the Facebook connection isn’t working anymore. Below the message, there was a huge, brightly colored “Reconnect” button and below that, a less obvious, smaller “No, don’t fix” link. As I tapped “No, don’t fix,” I said to the app, “Knock it off, Timehop, I don’t want to sign into Facebook – I deactivated that account four months ago. Leave me alone!”

During the five seconds while I had this thought, I was treating the software application like a human person. I reacted to it with emotions: annoyance, anger, indignation. I felt it should know better. I felt it wasn’t communicating well or showing any signs that it understood my needs.

After those five seconds passed, the rational part of my brain kicked in, and I thought, “Oh, yeah. This is software. This is code written by a human person. It’s been asking me to sign into Facebook regularly for a few weeks now. I can imagine that maybe a scheduled cron job checks for conditions (Facebook connection exists, credentials don’t work) and runs an alert method. Okay, sure.”

I suspect this experience is common for those of us close to the technology industry: we have an emotional, human-relational reaction to something a software app does, as though it were a person, but then we remember what the app really is and reason out a theory of how it was programmed to work. But I wonder about the folks who don’t think about, study, and work with software every day. It seems more likely that their initial five-second reaction remains unchallenged in their minds. Close your eyes and imagine a relative or friend outside the tech industry interacting with a software application and tell me if you hear anything like the following (you can open your eyes to keep reading):

“Why’d it decide to do that?”

“It’s just being cranky again.”

“Tell it to stop acting like that. Why is it so stupid?”

“This thing hates me.”

“That’s not what I wanted to do. Why won’t you listen to me!”

I’ve been reading Thinking, Fast and Slow by Daniel Kahneman since writing the original draft of this post, and I now see that the immediate response that personifies software is an act of System 1, while it is System 2 that challenges that reaction by considering the underlying code. In this case, if a person lacks knowledge of and experience with code, their System 2 is going to accept System 1’s initial understanding as good enough and move on.

Testing for Anthropomorphic Interaction

So why should I care about this as a software tester? Testers should care about this because we can’t stop users from reacting to software like it is human. It happens. It’s going to keep happening. Kahneman writes:

“Your mind is ready and even eager to identify agents, assign them personality traits and specific intentions, and view their actions as expressing individual propensities.”

If we keep this in mind, I believe it can help us spot threats to quality in the software we test. If I were testing the Timehop app, for example, I might say, “The system successfully prompts the user to fix a service connection every X number of days that the system cannot connect. Requirement satisfied. Test passed!” But if I remember that the user will be interacting with the system as though it were human, I allow myself to think, “Hey, what happens if the user taps ‘No, don’t fix’ after five consecutive prompts? Are they going to get annoyed or angry or confused? Are they going to think the system is an inconsiderate pest?”

Depending on the context, I would likely report this as a threat to quality. The machine is interacting with the user as though they are another machine (“if this, then this, loop”), while the user is interacting with the machine as though it is another person (“I told you no!”). I think that we should strive to meet the user’s expectations whenever reasonable. In this case, I would report that the system doesn’t change its behavior after repeated “No, don’t fix” clicks, which violates the user’s expectations of a person-like interaction. If pressed, I might suggest that after three consecutive “No, don’t fix” clicks the application offer to change the user’s connection settings and turn off the Facebook connection – or at least disable the alerts.
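To make that suggestion concrete, here is a minimal sketch of how a prompt could change its behavior after repeated declines. I have no idea how Timehop is actually built, so everything here is hypothetical: the `ConnectionPrompter` class, its method names, and the threshold of three are invented for illustration.

```python
from dataclasses import dataclass

# Hypothetical threshold, taken from the "three consecutive clicks" suggestion above.
MAX_CONSECUTIVE_DECLINES = 3


@dataclass
class ConnectionPrompter:
    """Tracks how a user responds to repeated "reconnect" prompts (illustrative only)."""

    service: str
    consecutive_declines: int = 0
    alerts_enabled: bool = True

    def next_prompt(self, connection_ok: bool) -> str:
        """Decide what to show the next time the scheduled check runs."""
        if connection_ok or not self.alerts_enabled:
            return "none"
        if self.consecutive_declines >= MAX_CONSECUTIVE_DECLINES:
            # The user has said "no" enough times: offer to turn the alerts off.
            return "offer_disable"
        return "reconnect"

    def record_decline(self) -> None:
        """The user tapped "No, don't fix"."""
        self.consecutive_declines += 1

    def record_reconnect(self) -> None:
        """The user fixed the connection, so the count starts over."""
        self.consecutive_declines = 0

    def disable_alerts(self) -> None:
        """The user accepted the offer to stop being asked."""
        self.alerts_enabled = False


# Simulate four scheduled checks where the user declines every reconnect prompt.
prompter = ConnectionPrompter(service="Facebook")
shown = []
for _ in range(4):
    prompt = prompter.next_prompt(connection_ok=False)
    shown.append(prompt)
    if prompt == "reconnect":
        prompter.record_decline()
print(shown)
```

The point of the sketch is only that the system remembers being told “no” and eventually responds differently, which is closer to what a user expects from a person.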

I said that we should strive to meet the user’s expectations “whenever reasonable” – there are of course limits to how far software developers should go to make their applications act more like a person. For one thing, extreme attempts are likely to fall flat and disappoint users even more – hello, Siri. For another, a feedback loop can emerge: as software acts more like a person, users expect all software to act more like a person, and become even more disappointed with its shortcomings. I’m concerned that misguided journalists are already feeding this with articles that provide only a shallow understanding of AI, its promise, and its shortcomings.

In the end, we can’t prevent users from anthropomorphizing software. But as testers we can perhaps anticipate and identify the ways it might threaten quality.

Users have anthropomorphised their complex tools since time immemorial – think of ships, cars and steam engines. I’m surprised that we so often ignore this effect for software. I suppose the difference is that in the past it was the engineer who worked on the steam engine or the ship who did the anthropomorphising, whereas we, as knowledge-based professionals working with something as abstract as code, are all supposed to be above that, and the expectation of human reactions is restricted to “mere”, untutored users.

We may be deluding ourselves. Who is to say that we can’t be effective developers or testers until we START anthropomorphising our systems a bit? After all, they are designed (ultimately) to work with humans. Even an API has to produce data flows that will eventually feature in some machine/human interaction.